In the wake of the recent AI Action Summit in Paris, former Google CEO Eric Schmidt raised a chilling question: could AI become the next weapon of mass destruction? (Source: https://www.politico.eu/article/ex-google-boss-eric-schmidt-fears-ai-risks-could-lead-to-a-bin-laden-scenario/)

Schmidt cautioned that AI falling into the wrong hands could pose extreme risks, evoking a potential “Osama bin Laden scenario” in which terrorists or rogue states turn AI against innocent people.

This is not mere science fiction. The International AI Safety Report, published in January 2025, details the many ways AI could be misused, including cyberattacks, the development of chemical and biological weapons, and the spread of disinformation.

The concern over AI misuse is not limited to a few experts: in one recent survey, 90% of respondents said they were worried that AI could be used for harmful purposes. This public anxiety is not unfounded. AI is a dual-use technology: the same capabilities that power beneficial applications can also be turned to harmful ones.

The potential for AI to become a weapon of mass destruction is a grave concern. AI could help attackers design and launch cyberattacks capable of crippling critical infrastructure, such as power grids and financial systems. It could also lower the barriers to developing and deploying chemical and biological weapons capable of causing mass casualties.

As AI systems become more capable and more widely accessible, the risk of their being used for malicious purposes is likely to grow. It is crucial that we act now to mitigate these risks.

What Can Be Done?

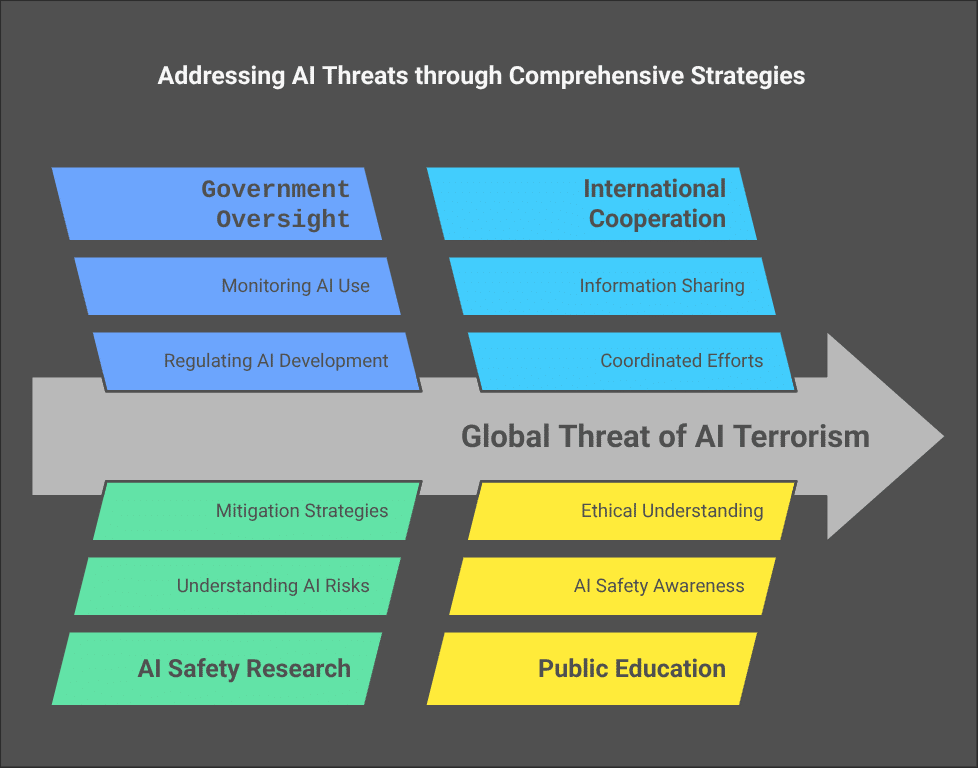

There is no single solution to the threat of AI terrorism, but several steps can help mitigate the risks.

- Increase government oversight: Governments should regulate how AI systems are developed and deployed, and monitor how both state and non-state actors use them.

- Invest in AI safety research: More research is needed to understand the risks of AI and how to mitigate them, spanning technical safety as well as AI ethics and governance.

- Promote international cooperation: Because the threat of AI terrorism is global, countries need to share information about AI risks and coordinate their efforts to mitigate them.

- Educate the public: People need to understand the risks of AI and how to protect themselves from AI-enabled attacks, along with the basics of AI safety, ethics, and governance.

The threat of AI terrorism is real and growing, but by acting now to mitigate the risks, we can help ensure that AI is used for good, not for harm.
