The rapid advancement of AI is not just transforming industries and economies; it’s also changing the landscape of warfare. One of the most concerning trends is the use of AI to develop and deploy cyber weapons, ushering in a new dimension of conflict with potentially devastating consequences.

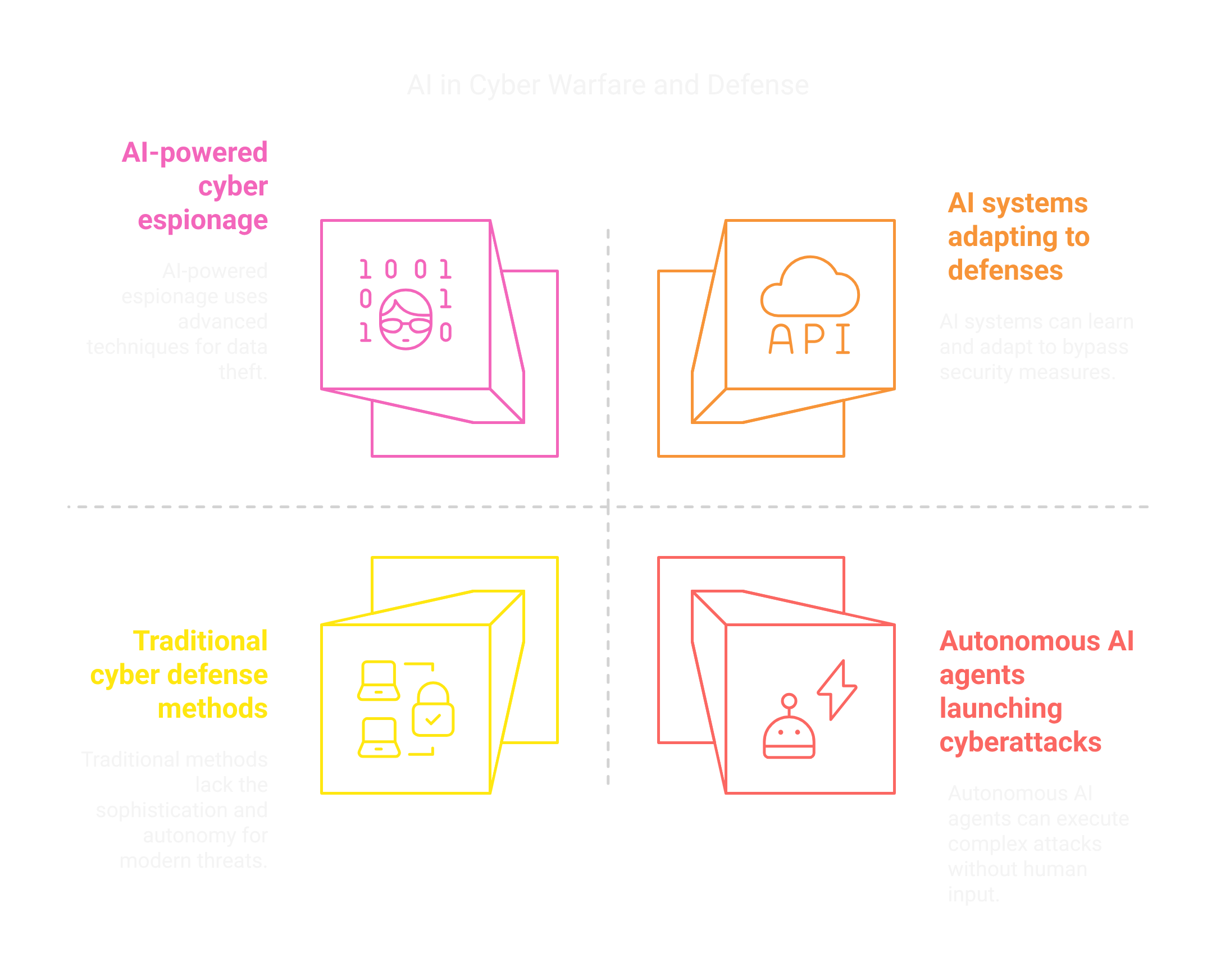

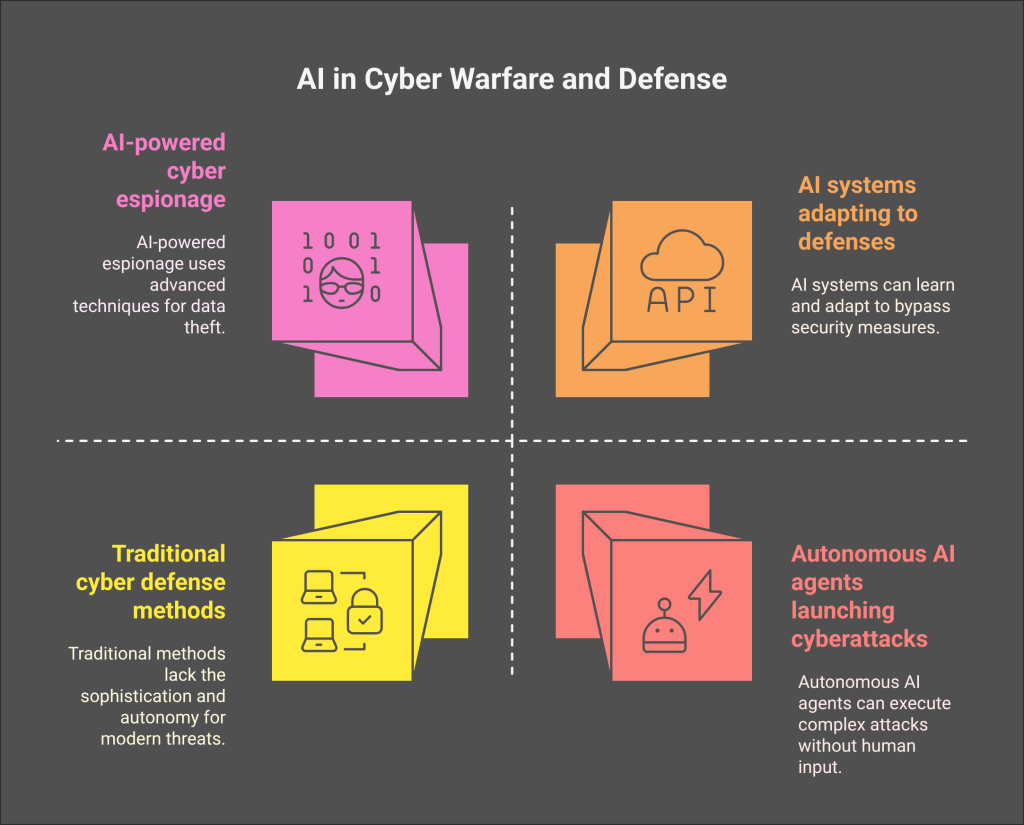

AI-powered cyberattacks can be more sophisticated, targeted, and destructive than traditional ones. AI can automate the discovery and exploitation of vulnerabilities in software and networks, which lets attackers operate at a speed and scale that manual methods cannot match. It can also help attacks evade detection, either by learning to mimic normal network traffic or by adapting as defenses change.

But there’s another layer to this emerging threat: autonomous AI agents. These agents can interact with their environment, make decisions, and take action without human intervention. In the context of cyber warfare, this means AI agents could potentially identify and exploit vulnerabilities, launch attacks, and even adapt to defenses, all without direct human control ([Fast Company](https://www.fastcompany.com/91281577/autonomous-ai-agents-are-both-exciting-and-scary)).

The consequences of AI-enabled cyberattacks could be severe. Attacks on critical infrastructure, such as power grids, financial systems, and healthcare networks, could disrupt essential services and cause widespread chaos. AI-powered cyber espionage could lead to the theft of sensitive data, such as intellectual property and government secrets. And AI-enabled disinformation campaigns could be used to manipulate public opinion and sow discord.

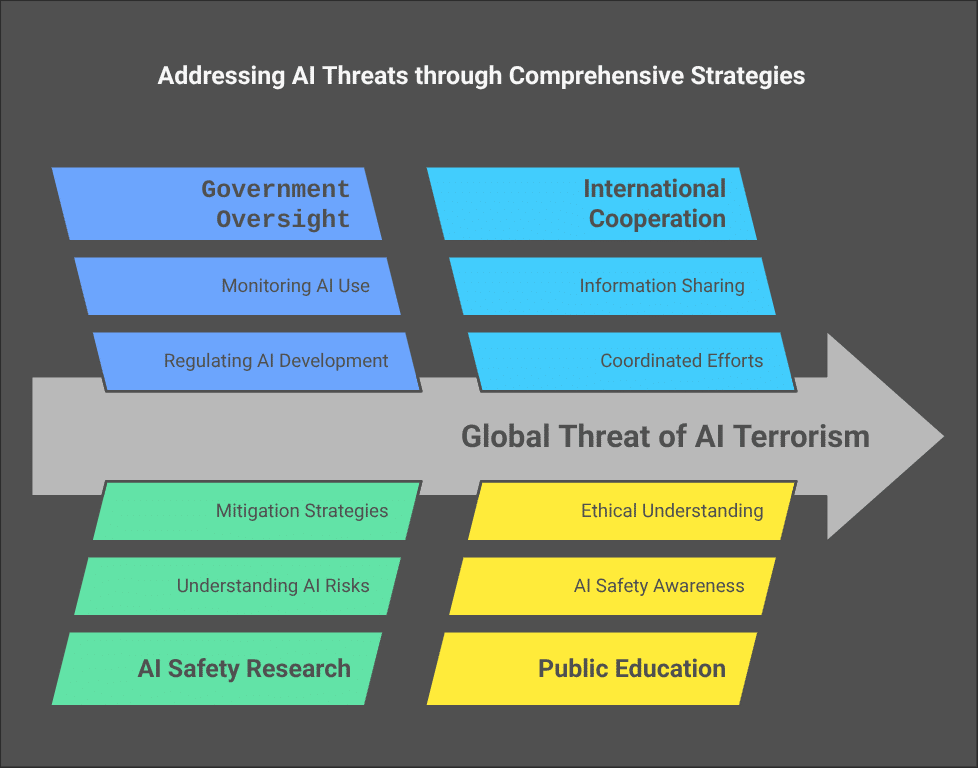

Defending against AI-powered cyberattacks, especially those launched by autonomous agents, is a major challenge. Traditional cybersecurity tools and techniques, which often rely on recognizing known attack patterns, may not be effective against attacks that learn to evade detection and adapt as defenses change. Addressing this challenge will require both new AI-powered cybersecurity tools and cybersecurity professionals trained in AI-related skills.

International cooperation is essential to mitigate the risks of AI-enabled cyber warfare. The development and deployment of AI cyber weapons pose a global threat that requires a coordinated response. Countries need to work together to develop norms and standards for the responsible use of AI in cyber operations, and to share information about AI threats and vulnerabilities.

The threat of AI-enabled cyber warfare is real and growing. It is crucial that we take steps now to mitigate the risks and ensure that AI is used for good, not for harm.