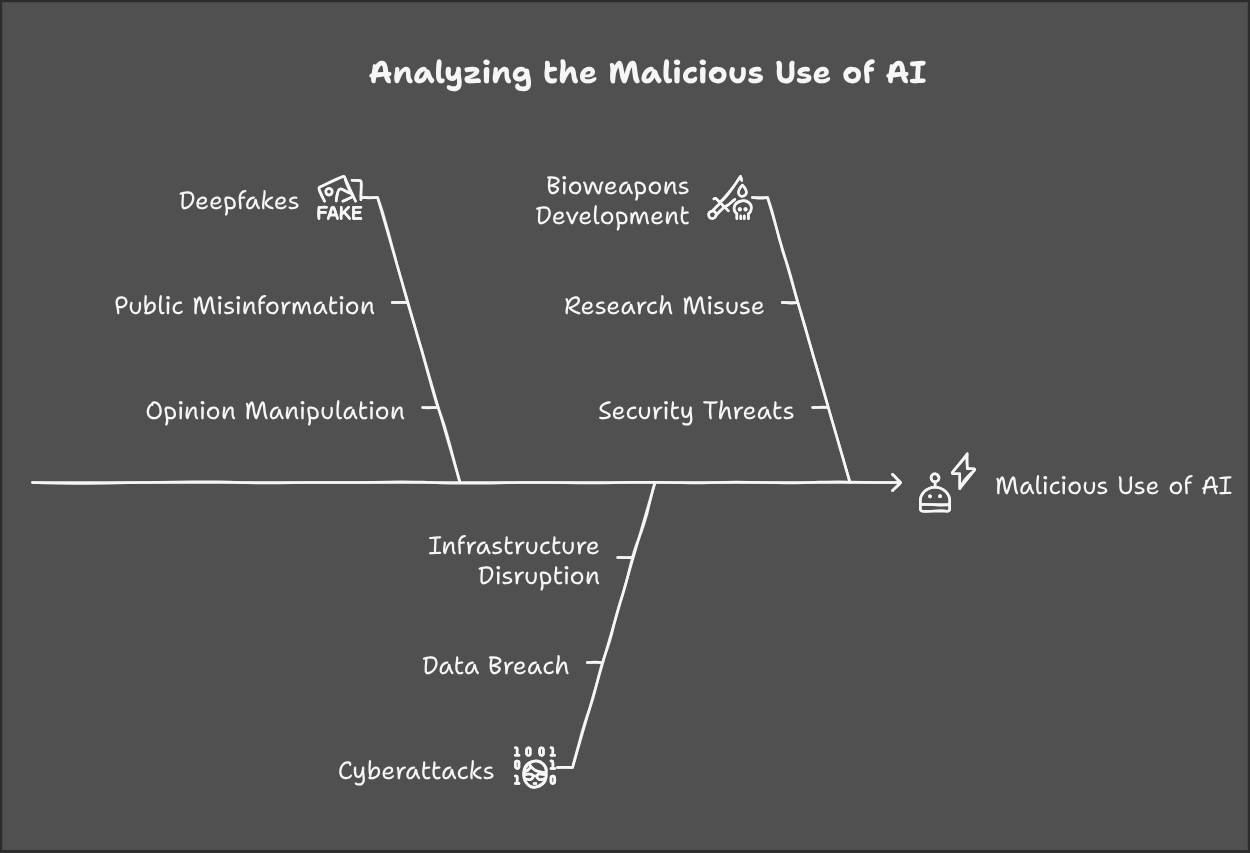

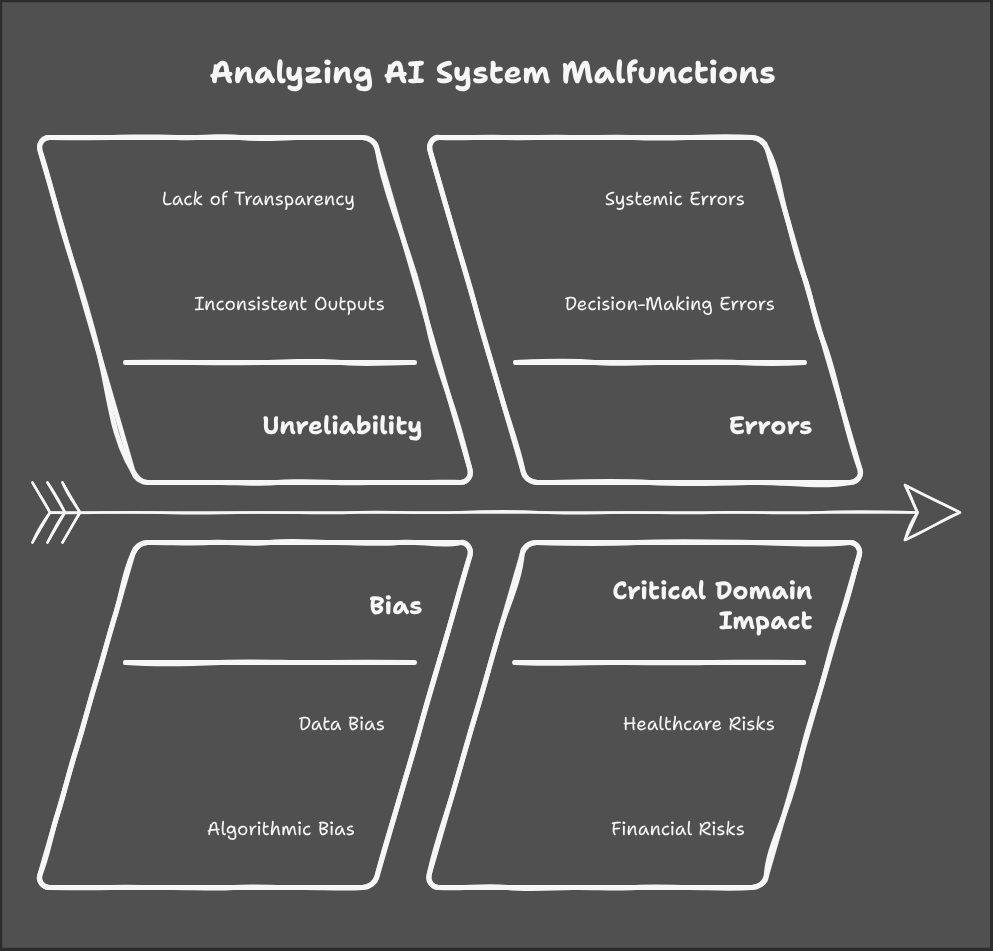

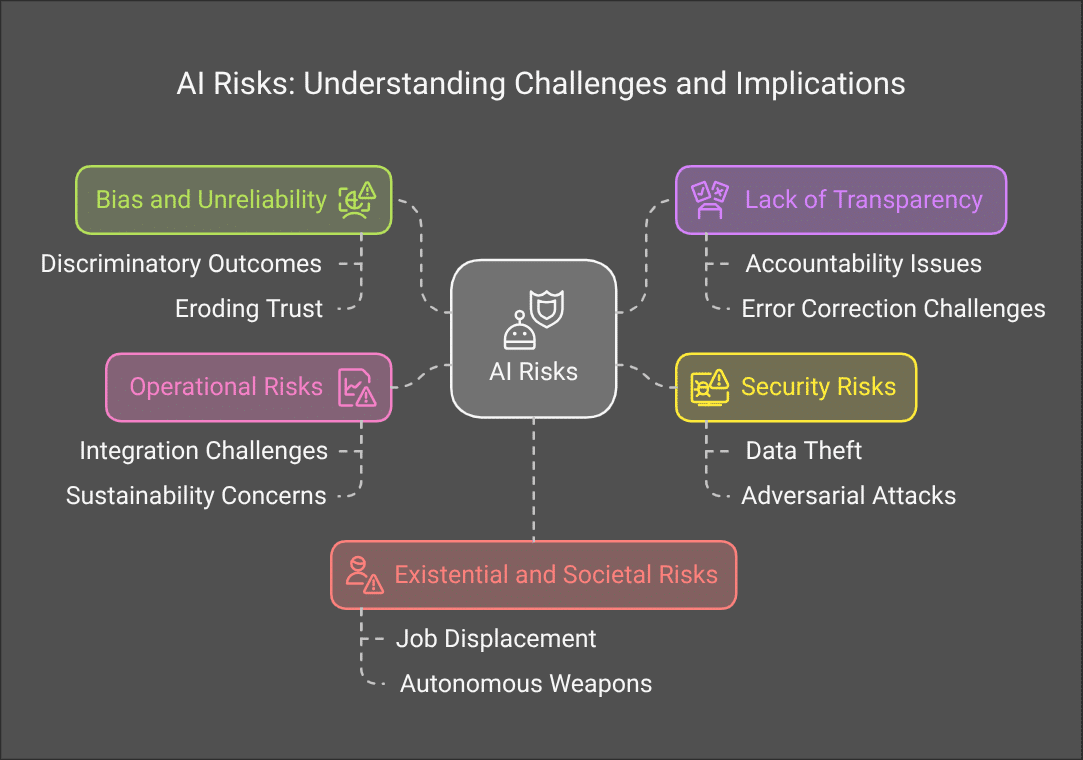

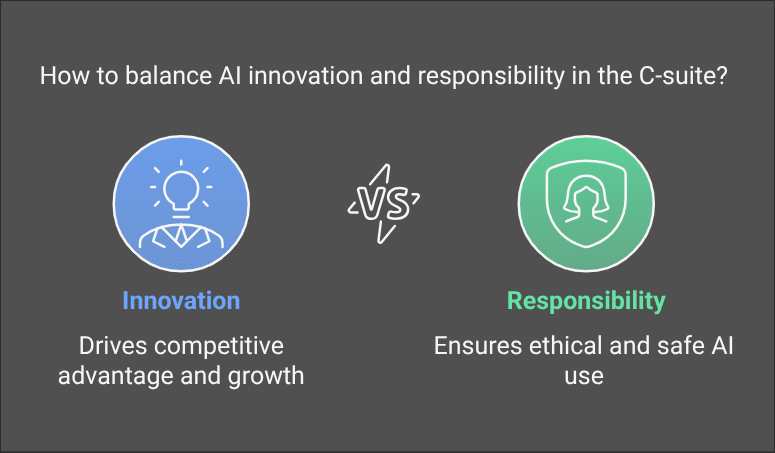

The rapid pace of AI innovation is exciting, but it also raises new challenges for safety and responsibility. As AI systems grow more capable, they can be misused for harmful purposes or fail in unexpected ways. That is why AI governance needs to keep pace with innovation.

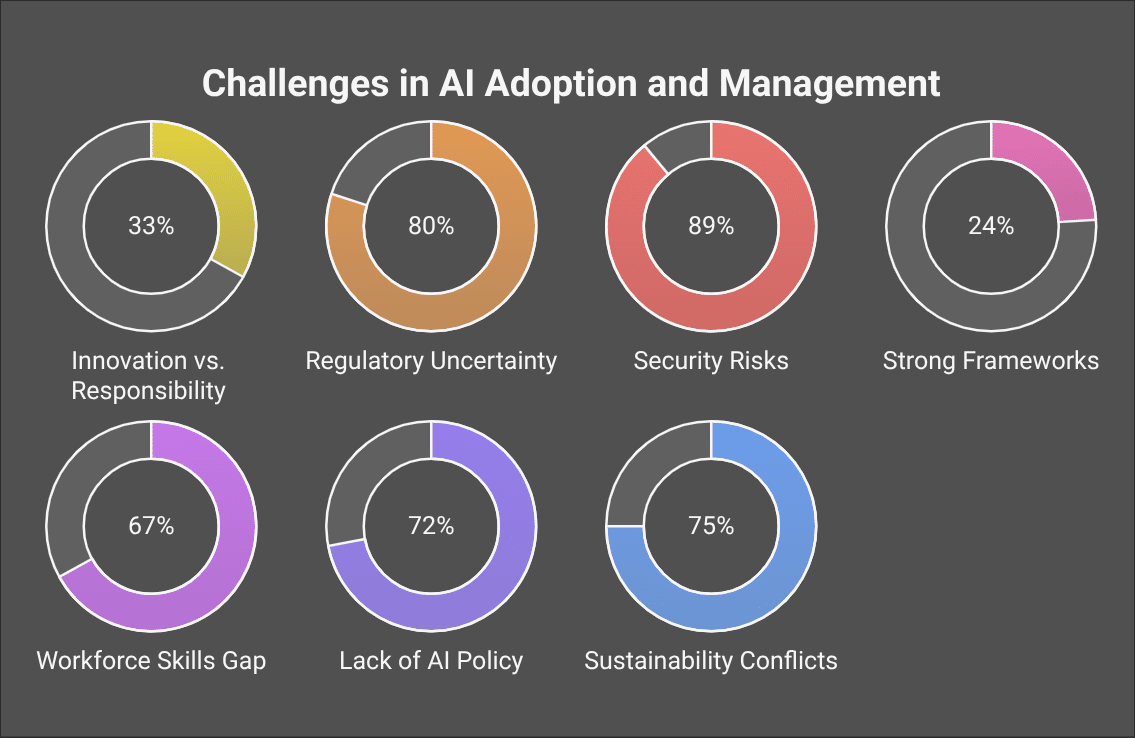

According to a recent NTT DATA survey of C-suite executives, there is a "responsibility gap" between the rapid pace of AI innovation and the slower development of effective governance ([press release](https://us.nttdata.com/en/news/press-release/2025/february/ntt-data-report-exposes-the-ai-responsibility-crisis)). Business leaders are calling for clearer regulation, and many are worried about the security risks of AI.

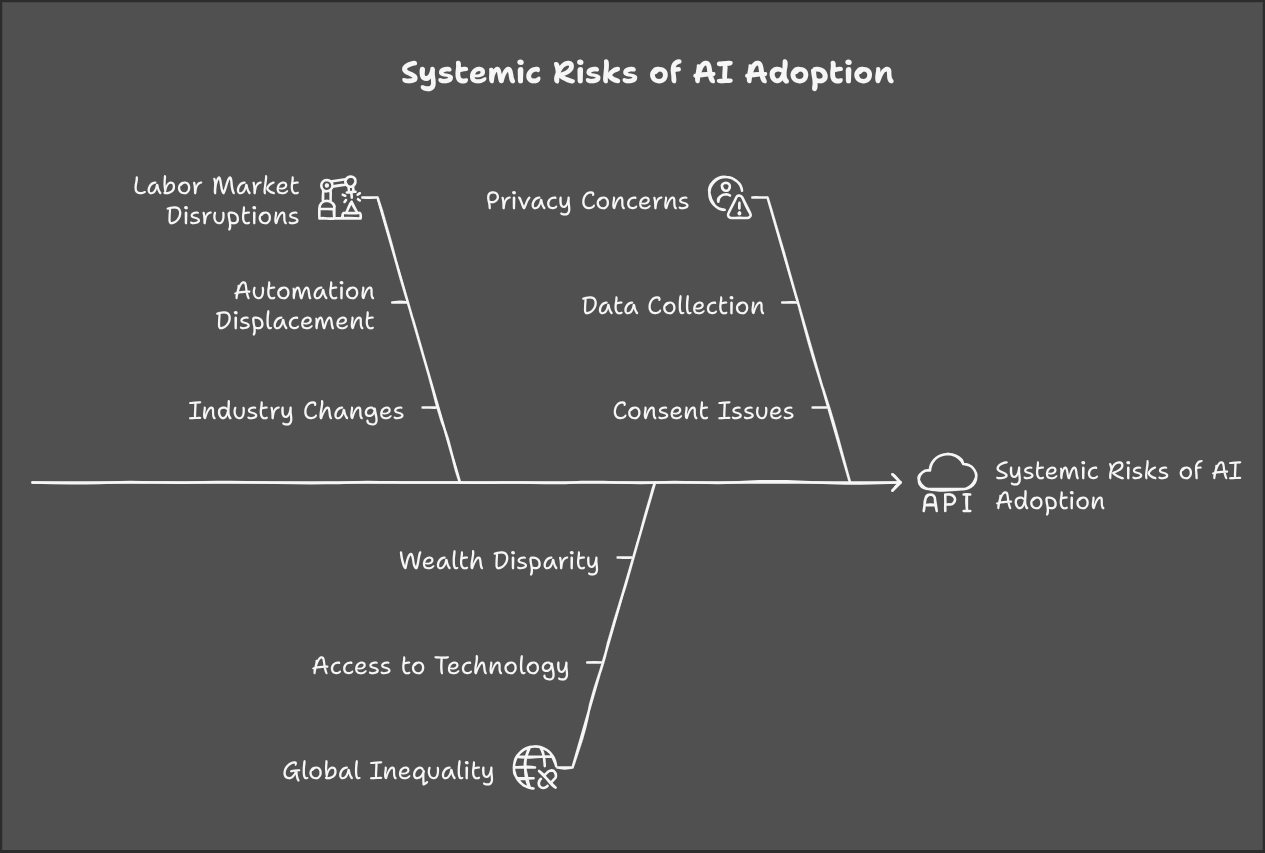

One of the biggest challenges is that there is no one-size-fits-all approach to AI governance. The risks and benefits of AI vary with the application: a tool that drafts marketing copy carries very different stakes from one that screens loan applications. Policymakers therefore need a nuanced approach that weighs the specific risks and benefits of each AI system.

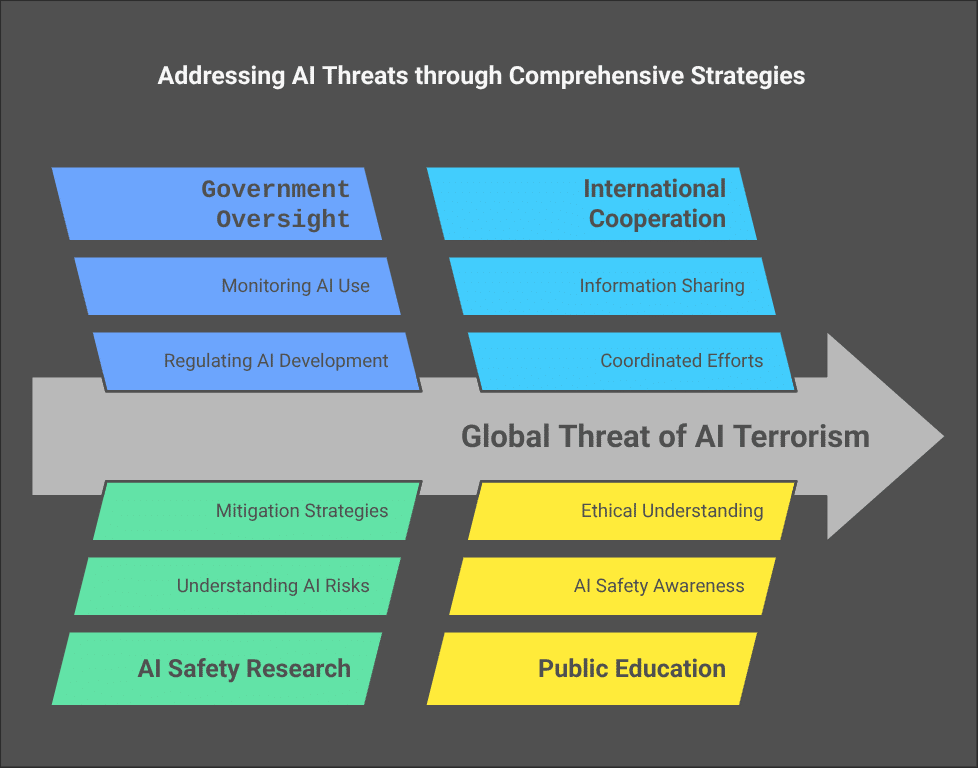

Another challenge is that AI is a global technology, so effective governance frameworks require international cooperation. That cooperation can be difficult to achieve, especially when national interests compete.

Despite these challenges, there are concrete steps that can improve AI governance. One is to invest in research on AI risks and risk management, which can help policymakers design more effective governance frameworks. Another is to develop international standards for AI safety and responsibility, which can help ensure that AI is developed and used in ways that benefit humanity.

AI is a powerful technology with the potential to transform our world in many ways, but only if it is developed and used responsibly. By taking a proactive approach to AI governance, we can help ensure that AI benefits humanity as a whole.

What do you think? How can we close the responsibility gap and ensure that AI is developed and used responsibly?