The global AI landscape is shifting with the emergence of DeepSeek, a Chinese company that has released powerful open-source AI models. Its DeepSeek-V3 and DeepSeek-R1 models rival the performance of leading American systems such as OpenAI’s ChatGPT and Anthropic’s Claude, at a fraction of the cost. Because the models are open-source and inexpensive, developers and users worldwide can access cutting-edge AI technology, which poses a potential threat to U.S. leadership in the field. (Source: https://www.foreignaffairs.com/china/real-threat-chinese-ai)

The Open-Source Advantage

DeepSeek’s models are open-source, meaning anyone can download, modify, and build upon them. Most leading American AI companies, by contrast, offer proprietary models. Historically, open-source software has fostered innovation and collaboration, enabling rapid development and improved security. DeepSeek’s open approach has helped it achieve remarkable performance despite U.S. export controls that limit its access to advanced chips.

The Chinese Influence

While DeepSeek’s open-source models offer significant advantages, they also raise concerns about Chinese government influence. Beijing has issued regulations requiring Chinese-made large language models (LLMs) to align with the “core values of socialism” and to avoid disseminating sensitive information. This censorship is evident in DeepSeek’s models, which refuse to answer, or give skewed answers to, questions on topics the Chinese government deems sensitive.

The Chip Advantage

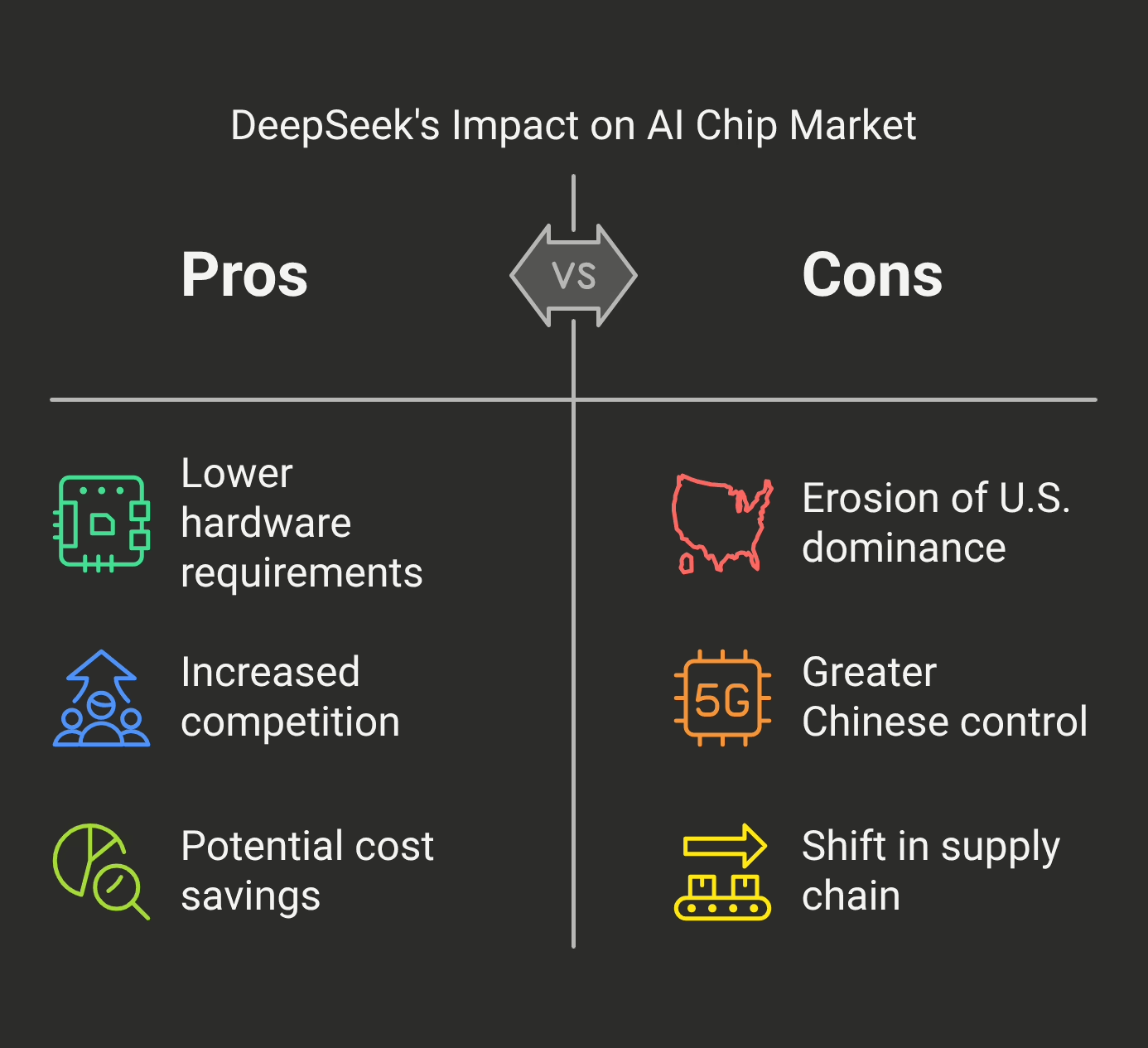

The rise of DeepSeek also has implications for the AI chip market. The United States currently dominates this market, and Nvidia’s GPUs power the majority of AI workloads. However, because DeepSeek’s models can run on less advanced hardware, such as Huawei’s Ascend chips, demand could shift toward Chinese-made chips. This could erode the West’s chip advantage and give China greater control over the AI supply chain.

A Call for Action

The emergence of DeepSeek highlights the need for the United States to reassess its AI strategy. While continuing to invest in frontier AI systems, the U.S. must also prioritize the development and support of open-source AI models. This includes increasing funding for open-source AI initiatives, creating incentives for companies to release open-source models, and fostering a robust open-source AI ecosystem.

The AI race is not just about technological advancement; it is also about shaping how AI is built and used worldwide. The United States must act decisively to remain a leader and to ensure that AI technologies are developed and deployed in line with democratic values.