Artificial intelligence (AI) is rapidly changing the world around us, but this progress comes with significant risks. Organizations, individuals, and society as a whole need to be aware of these risks and take steps to mitigate them.

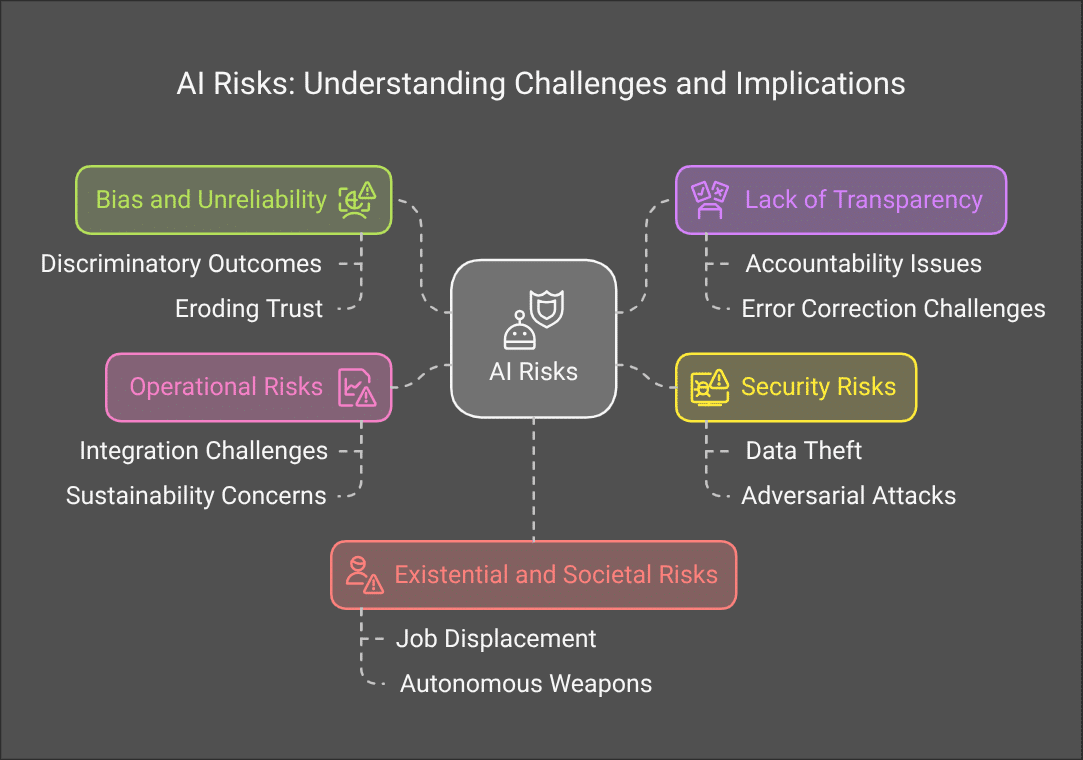

Understanding AI risks is essential for everyone in today’s technology-driven landscape.

Understanding AI Risks

Here are five key AI risks, based on information from the “International AI Safety Report”:

- Bias and unreliability in data and models: AI systems can produce inaccurate or biased results when the data they are trained on is incomplete, unrepresentative, or skewed. This can lead to discriminatory outcomes and erode trust in AI. For example, an AI system used to assess loan applications might unfairly discriminate against certain groups if its training data reflects historical biases.

- Lack of transparency and explainability: Many AI systems are “black boxes,” making it difficult to understand how they reach their decisions. This lack of transparency can hinder accountability and make it harder to identify and correct errors or biases. For instance, if a self-driving car causes an accident, it might be difficult to determine why without a clear understanding of the AI’s decision-making process.

- Security risks and vulnerability to attacks: AI models can be vulnerable to various security threats, including theft of the model itself, manipulation of its training data, and adversarial attacks that use deliberately crafted inputs to trick the model. Attackers could exploit these vulnerabilities to steal sensitive data, disrupt critical systems, or spread misinformation. For example, attackers could manipulate an AI-powered medical diagnosis system into providing false diagnoses, putting patients at risk.

- Operational risks: AI systems can lose accuracy over time as the incoming data, or the relationships between inputs and outcomes, shift away from what the model was trained on (often called data drift and concept drift). Integration challenges with existing IT infrastructure, sustainability concerns such as high energy consumption, and a lack of oversight can also create problems. For example, an AI system used for fraud detection might become less effective as new fraud patterns emerge, potentially leading to financial losses.

- Existential and societal risks: Some experts believe that advanced AI could pose existential risks to humanity, while others are concerned about its potential to exacerbate existing societal problems. AI-driven automation could lead to job displacement, and AI systems could be used to manipulate individuals or spread misinformation. There are also concerns that AI could be used to develop autonomous weapons systems or to create other dangerous technologies.

These risks highlight the urgent need for robust risk management frameworks and mitigation strategies. Organizations and policymakers need to address them proactively to ensure that AI is developed and used responsibly.

To learn more about these risks and potential mitigation strategies, you can check out the following resources:

- International AI Safety Report: https://www.gov.uk/government/publications/international-ai-safety-report-2025

- OECD AI Policy Observatory: https://www.oecd.org/en/topics/policy-issues/artificial-intelligence.html

By staying informed and taking a proactive approach to risk management, we can harness the benefits of AI while mitigating its potential harms.
