The integration of Artificial Intelligence (AI) into nuclear command and control systems presents a complex challenge, demanding a nuanced approach to risk management that goes beyond the simplistic “human-in-the-loop” model. https://europeanleadershipnetwork.org/report/ai-and-nuclear-command-control-and-communications-p5-perspectives/

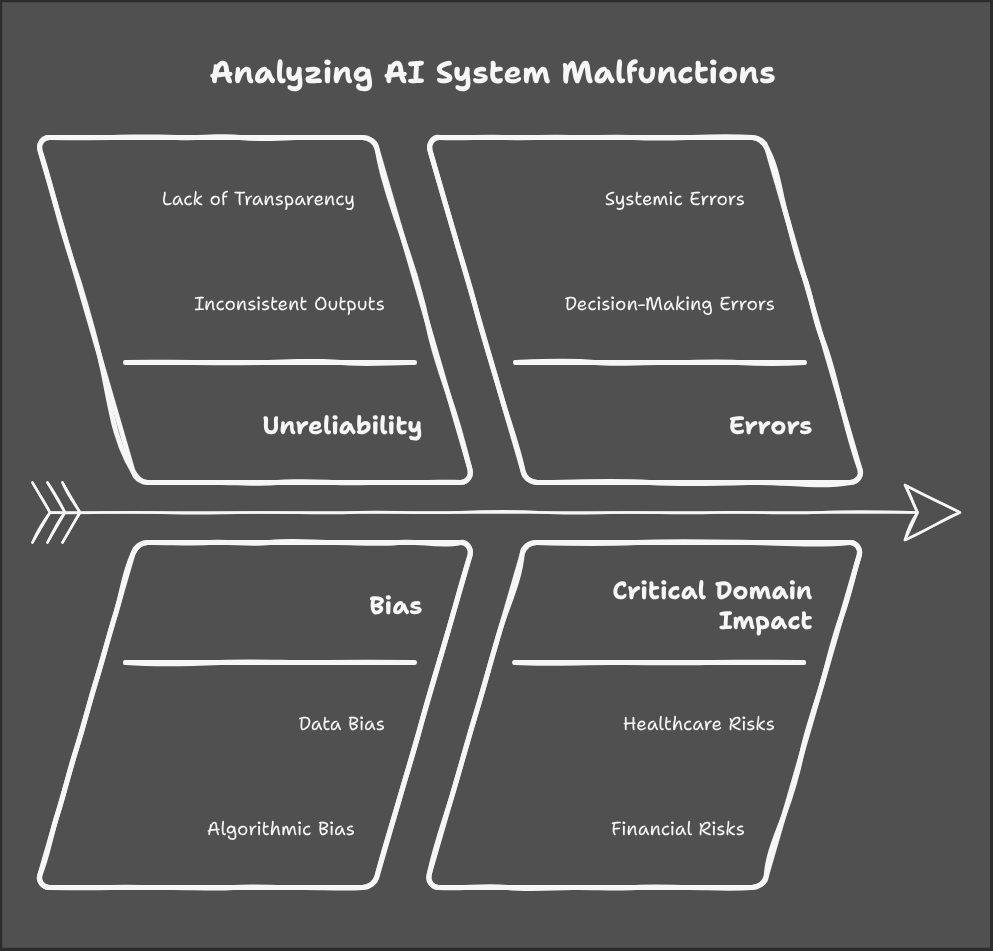

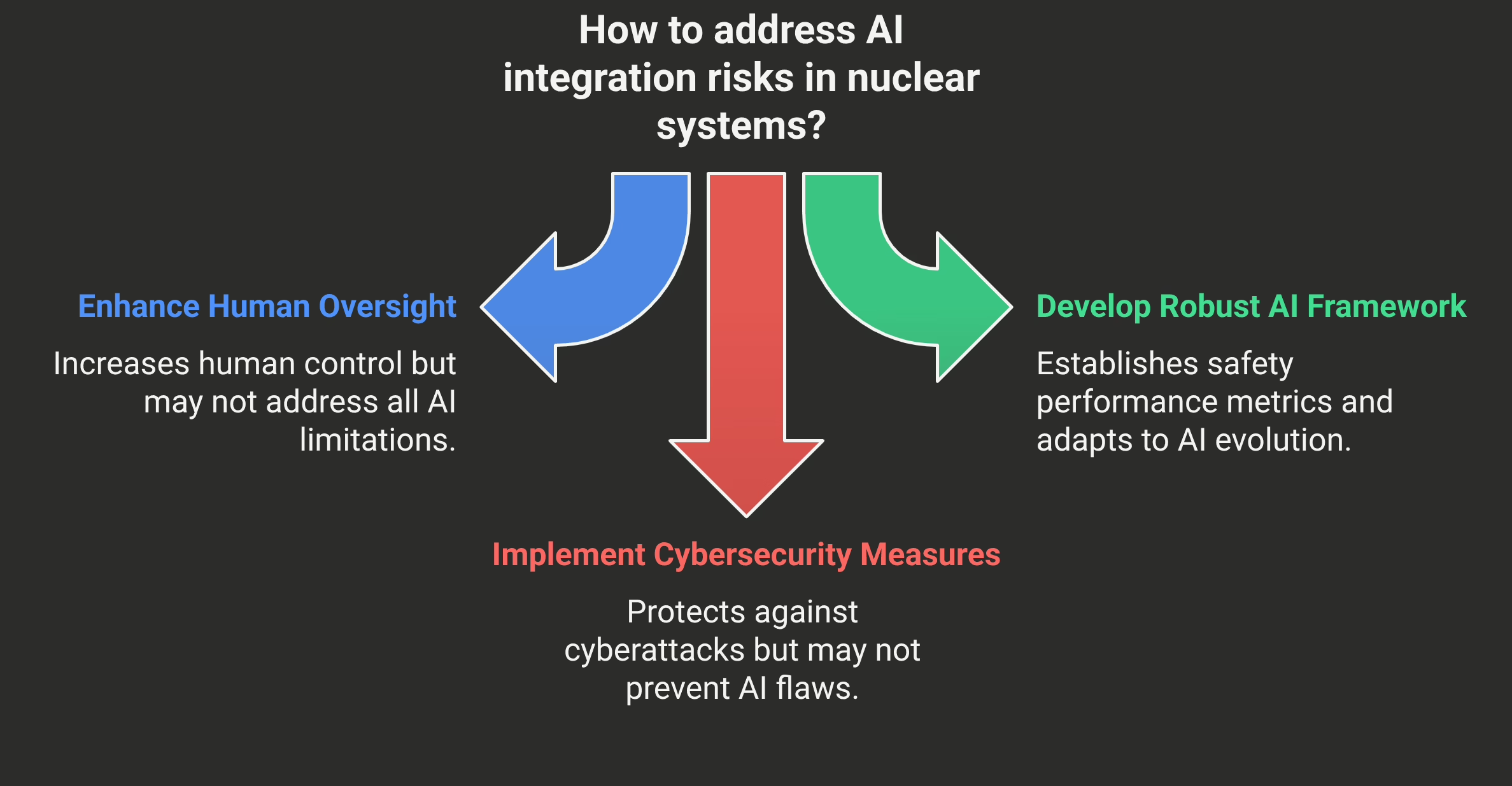

While maintaining human oversight is crucial, it is not a foolproof safeguard against unintended escalation. The limitations of current AI models, such as hallucinations, opacity, and susceptibility to cyberattacks, can lead to flawed predictions, skewed decision-making, and compromised system integrity. Furthermore, the rapid evolution of AI means that new, unforeseen risks may emerge, making a static “human-in-the-loop” approach insufficient. https://warontherocks.com/2024/12/beyond-human-in-the-loop-managing-ai-risks-in-nuclear-command-and-control/

Instead, a more robust framework is needed, one that focuses on the overall safety performance of the AI-integrated system. This framework should establish a quantitative threshold for the maximum acceptable probability of an accidental nuclear launch, drawing lessons from civil nuclear safety regulations.
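As an illustration, such a threshold can be written as an inequality on the probability of an accidental launch over a fixed period of operation. The symbols $P_{\text{acc}}$ and $P_{\max}$ are our own notation, not taken from the cited sources, and no particular numerical value is implied:

$$
P_{\text{acc}} = \Pr\left[\text{accidental launch within one year of operation}\right] \le P_{\max}
$$

Civil nuclear regulation expresses comparable goals in the same form, for example as a core damage frequency per reactor-year.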

A performance-based approach, similar to that used in civil nuclear safety, would define specific safety outcomes without prescribing the exact technological means to achieve them. This would leave room to adapt to evolving AI capabilities while keeping the risk of accidental launch below an acceptable level.

The adoption of probabilistic risk assessment techniques, which quantify the likelihood of various accident scenarios, would provide a more comprehensive understanding of the risks involved in AI integration. This quantitative approach would enable policymakers to make informed decisions about the acceptable levels of AI integration in nuclear command and control systems.
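To make the idea concrete, here is a minimal Python sketch of a PRA-style calculation. It assumes that each accident sequence is an initiating event followed by a chain of safeguards that must all fail, that those failures are independent, and that the rare-event approximation (summing sequence frequencies) applies. The names `AccidentSequence`, `total_launch_frequency`, and `meets_target`, the scenarios, and every number are hypothetical placeholders chosen for illustration; none come from the cited sources or describe any real system.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class AccidentSequence:
    """One hypothetical path from an initiating event to an accidental launch."""
    name: str
    initiator_per_year: float                 # assumed rate of the initiating event
    barrier_failure_probs: tuple[float, ...]  # assumed per-demand failure probability of each safeguard

    def frequency(self) -> float:
        # Launch occurs only if every safeguard fails. Assuming independent
        # failures, the sequence frequency is the initiator rate times the
        # product of the barrier failure probabilities.
        return self.initiator_per_year * prod(self.barrier_failure_probs)


def total_launch_frequency(sequences: list[AccidentSequence]) -> float:
    # Rare-event approximation: sum the sequence frequencies, which is
    # reasonable when each is small and sequences rarely co-occur.
    return sum(s.frequency() for s in sequences)


def meets_target(sequences: list[AccidentSequence], max_per_year: float) -> bool:
    # Performance-based check: only the aggregate outcome is compared with
    # the target; how each safeguard is implemented is not prescribed.
    return total_launch_frequency(sequences) <= max_per_year


# Placeholder values for illustration only, not estimates of any real system.
TARGET_PER_YEAR = 1e-4

baseline = [
    # Barriers (hypothetical): human reviewer rejects the false warning,
    # then an independent procedural/authentication check blocks the order.
    AccidentSequence("AI false warning of attack", 1.0, (1e-2, 1e-3)),
    AccidentSequence("cyber compromise of decision support", 1e-2, (1e-1, 1e-3)),
]

# Same architecture, but the human reviewer defers to the AI far more often
# (automation bias), so the first safeguard in the first sequence is weaker.
degraded = [
    AccidentSequence("AI false warning of attack", 1.0, (0.5, 1e-3)),
    baseline[1],
]

for label, seqs in (("baseline", baseline), ("degraded human check", degraded)):
    freq = total_launch_frequency(seqs)
    print(f"{label}: {freq:.1e} per year, meets target: {meets_target(seqs, TARGET_PER_YEAR)}")
```

With these placeholder values the baseline total is about 1.1e-5 per year and meets the target, while the degraded case rises to about 5e-4 per year and fails it. A human in the loop counts only as much as its actual failure probability, and the check does not depend on which technology provides each safeguard. Real PRAs also model common-cause failures, which break the independence assumption used in this sketch.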

The international community must move beyond mere declarations of human control and engage in a deeper discussion about AI safety in the nuclear domain. This discussion should focus on establishing quantifiable safety objectives and developing a performance-based governance framework that can adapt to the evolving risks of AI.