The latest International AI Safety Report is a must-read for anyone working in risk management. https://www.gov.uk/government/publications/international-ai-safety-report-

This landmark report, compiled by 96 AI experts worldwide, offers a stark assessment of the evolving AI landscape and its potential impact on society.

Here are the key takeaways that risk professionals need to know:

AI is evolving at an unprecedented pace. Just a few years ago, AI models struggled to generate coherent text. Today, they can write complex computer programs, create photorealistic images, and even engage in nuanced conversations. This rapid progress shows no signs of slowing down, with experts predicting further significant advancements in AI capabilities in the coming years.

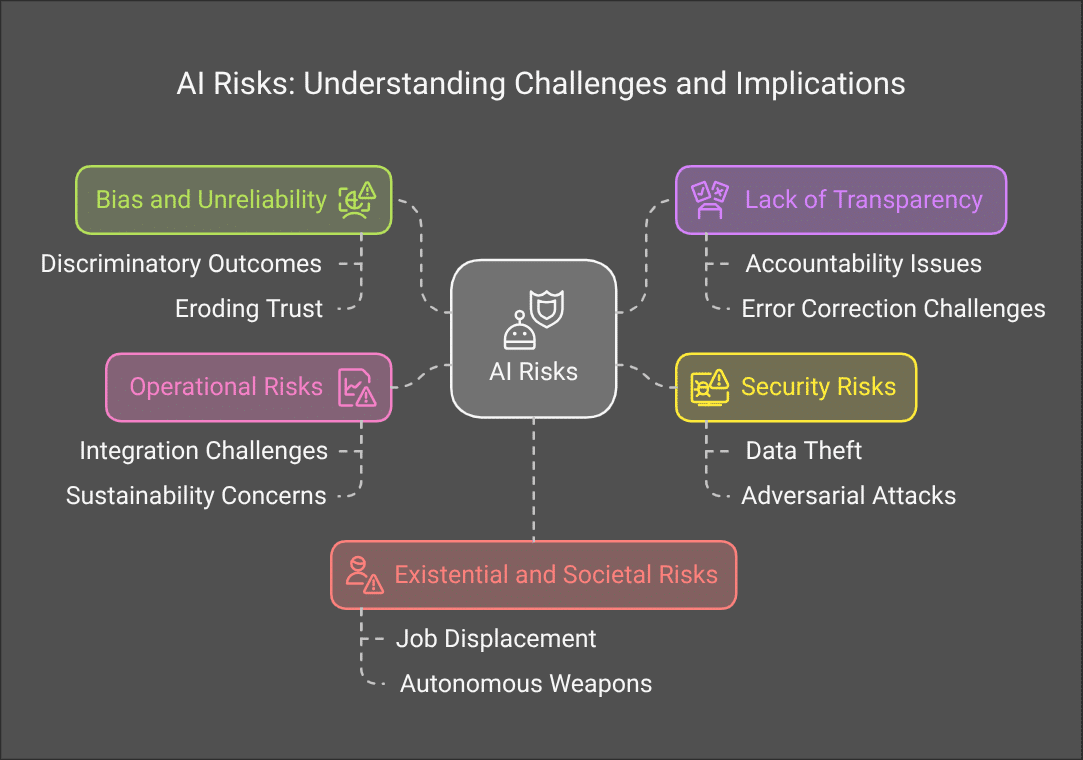

This rapid evolution brings new and complex risks. The report highlights several existing and emerging risks associated with AI, including:

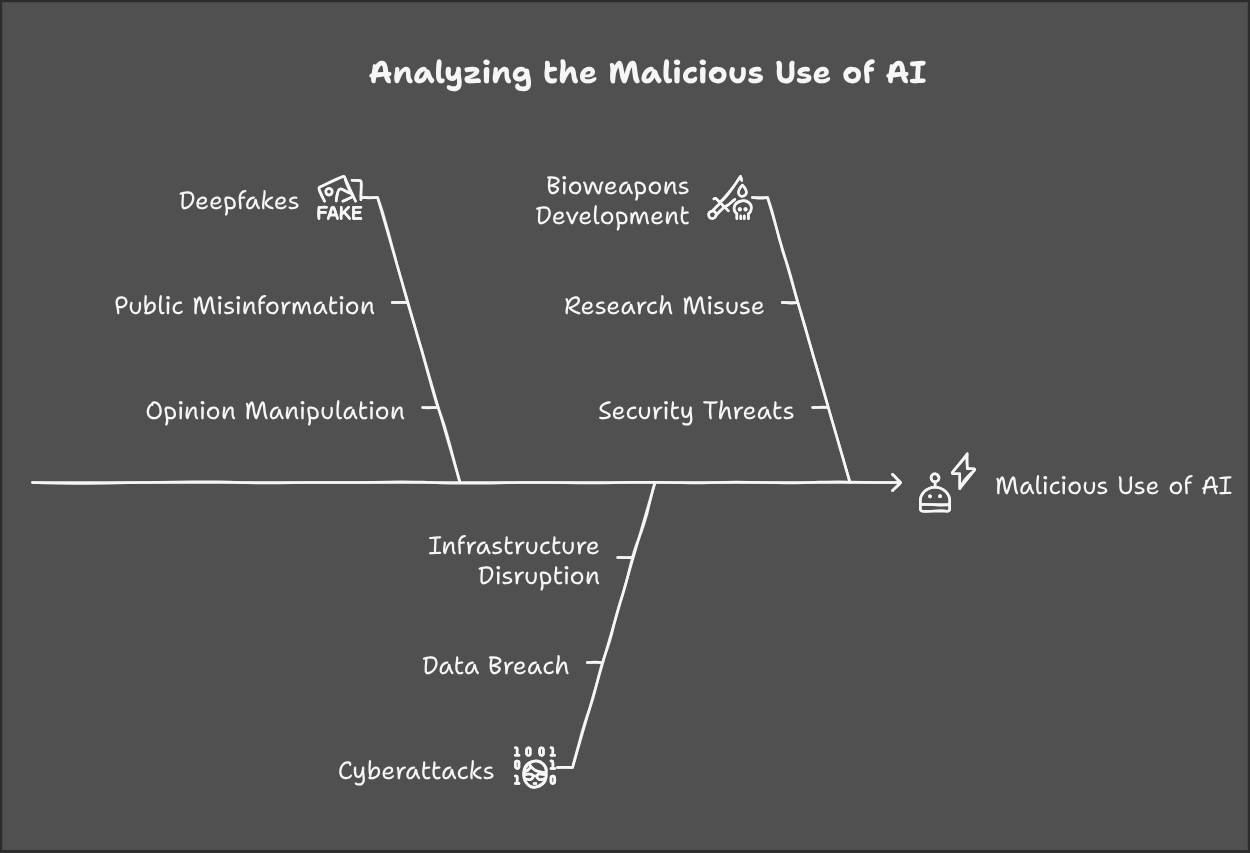

- Malicious use: AI can be used to create harmful deepfakes, manipulate public opinion, launch sophisticated cyberattacks, and even facilitate the development of bioweapons.

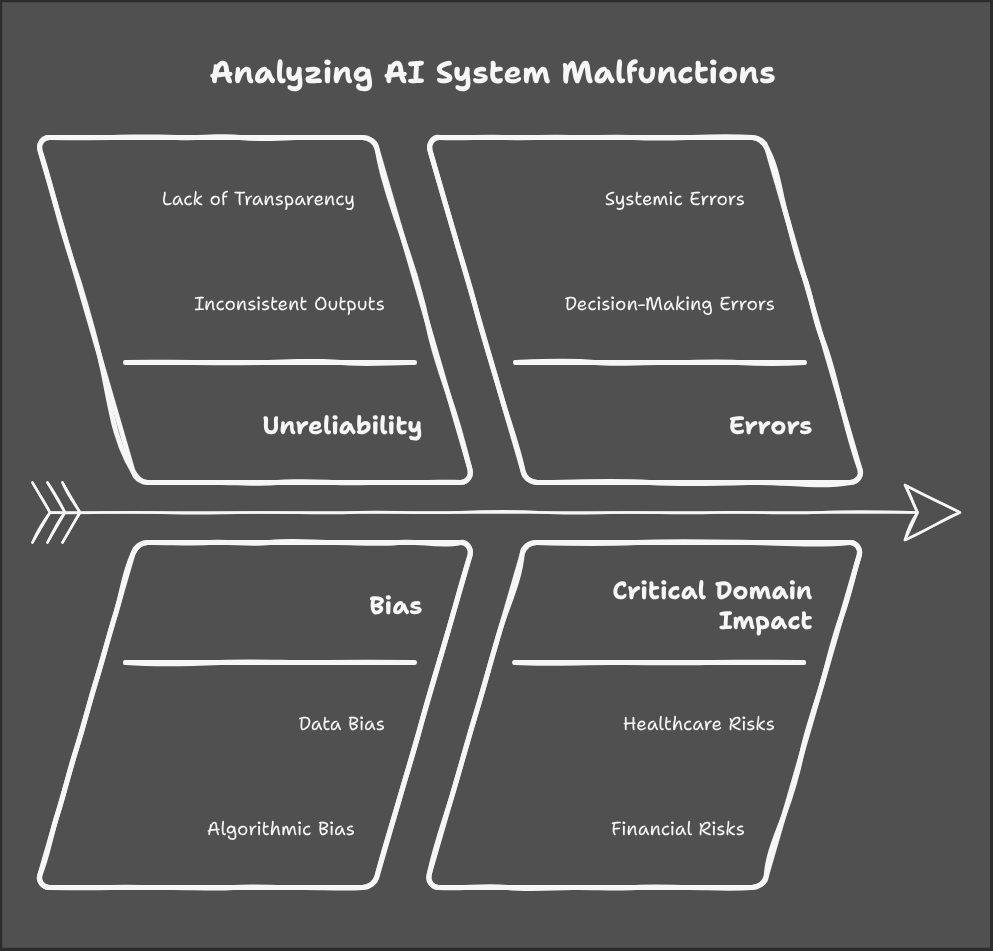

- Malfunctions: AI systems can be unreliable, biased, and prone to errors, potentially leading to harmful consequences in critical domains like healthcare and finance.

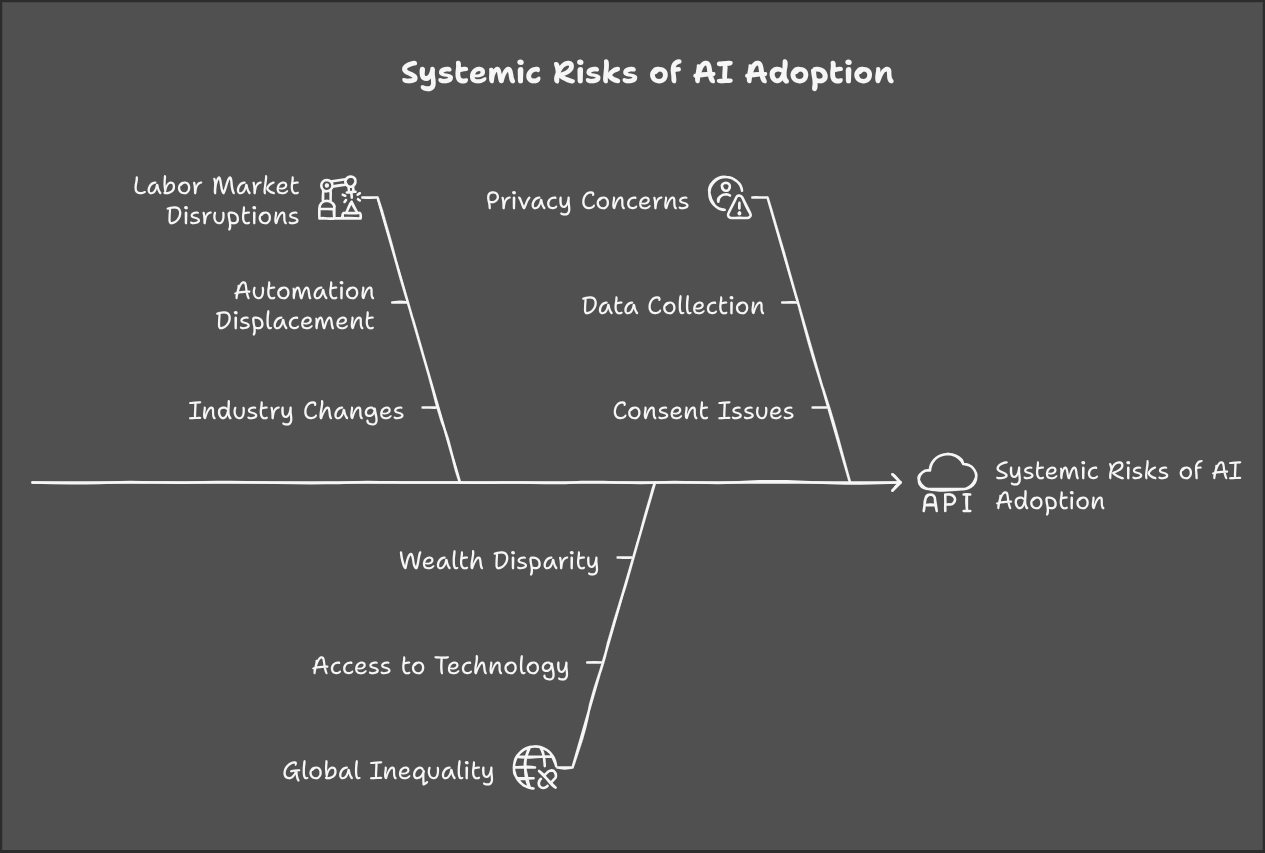

- Systemic risks: The widespread adoption of AI could lead to significant labor market disruptions, exacerbate global inequalities, and erode privacy.

Risk management is struggling to keep up. The report emphasizes that current risk management techniques are often insufficient to address the complex and evolving risks posed by AI. It describes the result as an “evidence dilemma”: policymakers and risk professionals must make difficult decisions with limited information, often under pressure.

A proactive and comprehensive approach is needed. The report calls for AI risk management that involves:

- Increased investment in AI safety research.

- Greater collaboration between AI developers, policymakers, and civil society.

- The development of robust risk assessment frameworks and mitigation strategies.

The International AI Safety Report serves as a wake-up call for risk professionals. It’s a reminder that the AI revolution is not just about technological advancement, but also about managing the risks that come with it. By understanding the report’s key takeaways and managing AI risk proactively, we can help ensure that AI benefits society while minimizing its potential harms.